How my web analytics project handles hundreds of thousands of requests

One of my projects is Swetrix, an open-source web analytics platform. It's similar to Google Analytics or Plausible, but better and more usable. One of the challenges in building it was making it scale so that it could handle as much traffic as needed.

Initially it was hosted on a single VPS, and it would probably still work fine there. But more and more traffic was being tracked into Swetrix every day, so I wanted to make sure it would keep running smoothly even under a huge amount of incoming traffic.

Possible solutions

The first idea was to simply add more power to the machine (this is called "vertical scaling"). This approach would work fine for 90% of projects, but in my case the machine would remain a single point of failure: if it went down for any reason, the whole system would be inaccessible, which is not acceptable for a high-demand service. Also, such a solution felt too simple, and the engineer in my soul wanted to build something more fun.

The other option was to split the whole codebase into microservices, each responsible for its own tasks, and use something like Kubernetes to deploy and manage them. This could reduce the load on each service, but such an architecture would be difficult to maintain: everything would become an individual service, and I would have to set up proper communication between them. Given that I'm mostly working on this project on my own, it would drastically increase the time it takes me to ship anything!

It would also lead to a lot of code repetition and make testing and debugging more difficult (remember that each service is independent, with its own logs and test coverage). On top of that, I'd have to rely on something like AWS to scale it automatically when needed, and in the process I could accidentally bankrupt myself.

What did I settle on?

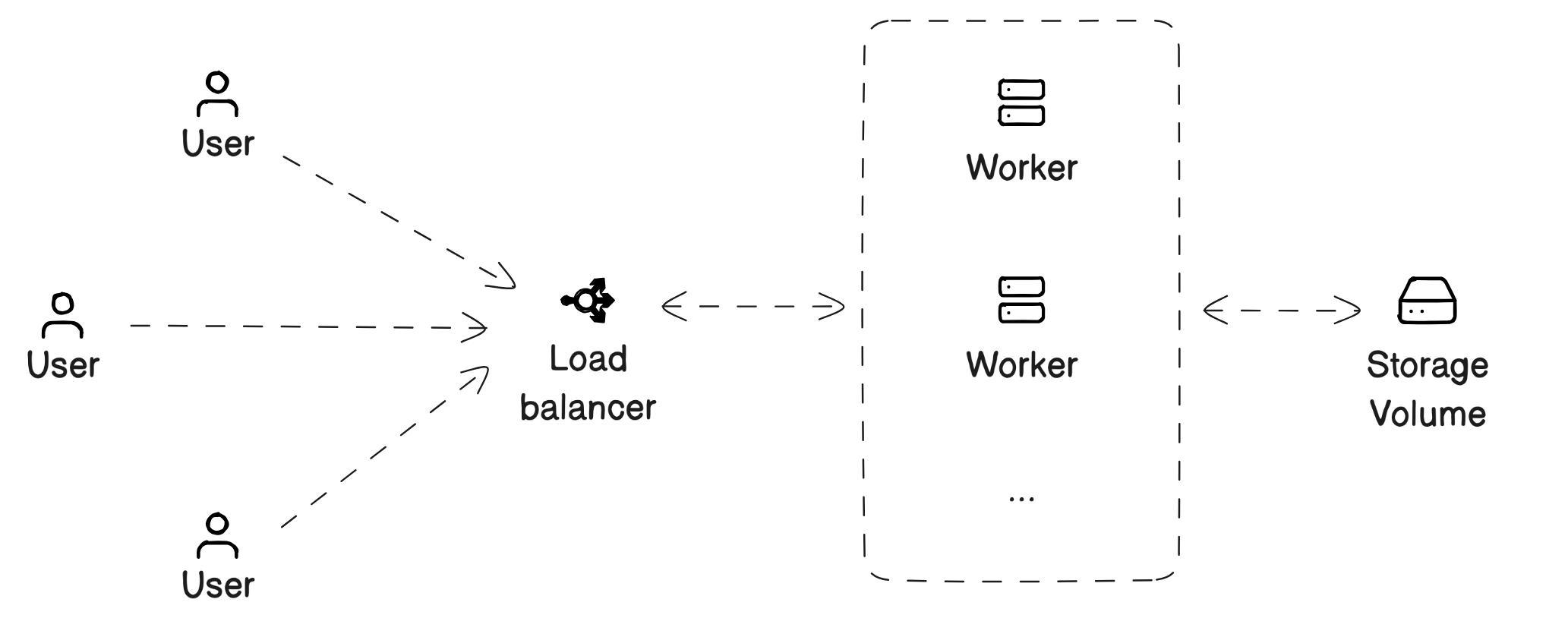

So, after considering the possible solutions, I settled on the 'golden mean': I decided to replicate the existing application across a few workers, each hosted on its own server. There is a single server for each backend instance, and the database also gets its own server.

This way I can always add more resources to a VM (vertical scaling) or simply add more servers (horizontal scaling) as needed. It also gives me more flexibility, as I can stop, scale up, or scale down any machine at any time.

Requests to the Swetrix servers go through a load balancer, which routes each request to the least busy worker. The load balancer is also responsible for managing the HTTPS certificates for the domain itself. If I wanted to offer "white-label analytics", where each customer could have their own dedicated dashboard, this process would be easy to automate by assigning certificates with the load balancer and managing the dashboards with Traefik.
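The post doesn't say which load balancer is used, so the following is only a minimal Python sketch of the "least busy worker" idea, commonly called least-connections balancing: each request goes to the worker with the fewest requests currently in flight. The `Worker` and `LeastConnectionsBalancer` names are hypothetical, and a production load balancer would also handle health checks, timeouts and TLS termination.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Worker:
    """One backend instance behind the load balancer (hypothetical model)."""

    name: str
    active_requests: int = 0  # requests currently being served


@dataclass
class LeastConnectionsBalancer:
    """Sends each request to the worker with the fewest in-flight requests."""

    workers: List[Worker] = field(default_factory=list)

    def add_worker(self, worker: Worker) -> None:
        # Horizontal scaling: a new server simply joins the pool.
        self.workers.append(worker)

    def remove_worker(self, name: str) -> None:
        # Take a server out of rotation, e.g. to stop or resize it.
        self.workers = [w for w in self.workers if w.name != name]

    def acquire(self) -> Worker:
        """Pick the least busy worker and count the new request against it."""
        if not self.workers:
            raise RuntimeError("no workers available")
        # min() returns the first of several equally busy workers,
        # so ties are broken by pool order.
        worker = min(self.workers, key=lambda w: w.active_requests)
        worker.active_requests += 1
        return worker

    def release(self, worker: Worker) -> None:
        """Mark one of the worker's requests as finished."""
        worker.active_requests = max(0, worker.active_requests - 1)
```

With two workers in the pool, three calls to `acquire()` hand out the first worker, then the second, then the first again; as soon as a request on the second worker is released, it becomes the preferred target. Adding a server is a single `add_worker` call, which mirrors the horizontal scaling described above.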

The database services and workers are now separate and communicate over a private network. This gives me even more flexibility, because the storage service and the workers are independent. It also ensures that no one can access the database from the Internet, since strict firewall rules block any outside requests. I can give the databases more storage without having to scale the worker services, and backups are easier too.

Workers are now assigned one of two roles: master worker or regular worker. They behave in much the same way, except that only the master worker performs scheduled tasks (such as preparing email reports or sending out alerts), which prevents the same task from being performed by several workers at once.
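One simple way to get this behaviour is sketched below in Python, assuming each server's role is set through a hypothetical `WORKER_ROLE` environment variable (the post doesn't say how roles are assigned). Every scheduled job is wrapped so that it returns early on regular workers, which means the same schedule can be deployed to all workers while only the master actually does the work.

```python
import os
from typing import Callable, Iterable, Mapping, Optional

# Hypothetical convention: each server is started with WORKER_ROLE=master
# or WORKER_ROLE=regular; a missing value is treated as "regular".
ROLE_VAR = "WORKER_ROLE"


def is_master(env: Optional[Mapping[str, str]] = None) -> bool:
    """Return True if this worker has the master role."""
    source = os.environ if env is None else env
    return source.get(ROLE_VAR, "regular") == "master"


def master_only(
    task: Callable[[], None], env: Optional[Mapping[str, str]] = None
) -> Callable[[], bool]:
    """Wrap a scheduled task so it only executes on the master worker.

    The returned callable reports whether the task actually ran.
    """

    def run() -> bool:
        if not is_master(env):
            return False  # regular workers skip scheduled work entirely
        task()
        return True

    return run


def simulate_cron_tick(
    envs: Iterable[Mapping[str, str]], task: Callable[[], None]
) -> int:
    """Fire the same schedule on every worker and count how many ran the task."""
    return sum(master_only(task, env)() for env in envs)
```

A fixed master role keeps things simple because no distributed lock is needed, but scheduled tasks pause if the master goes down until another worker is given that role. A common alternative is for workers to compete for a short-lived lock in a shared store such as Redis before running each job.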

The services can also communicate with each other through Redis, which is really helpful. Overall, the whole setup costs me around £50 per month, whereas a similar setup on GCP or AWS would cost hundreds of pounds, plus the headache of maintaining it.